Log aggregation centralizes log management to simplify data analysis and file monitoring, helping enterprises improve operational efficiency and make better use of resources.

Organizations across all industries generate large volumes of structured and unstructured log data from networks, security devices, traffic monitoring systems, workstations, and servers, whether networked or standalone. This data needs to be aggregated for effective analytics and forensic investigations.

What is log aggregation?

Log aggregation is the practice of gathering and consolidating log data in one central location for quick search and analysis by developers and security analysts.

No matter their industry, enterprises generate vast volumes of unstructured and structured log data from network devices, traffic monitoring systems, workstations, servers, and other sources. When this data is scattered across locations with various data formats, it becomes challenging to use for analysis and troubleshooting.

An efficient log aggregation solution should parse the data so that developers and security analysts can search for specific errors more easily, and it should standardize the format so that information can be connected across logs.
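As a rough illustration of what parsing means here, the sketch below turns one raw log line into named fields. The line layout (`<date> <time> <LEVEL> <service>: <message>`) and the field names are assumptions made for the example, not a format any particular tool requires.

```python
import re

# Hypothetical line layout: "<date> <time> <LEVEL> <service>: <message>"
LINE_PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) "
    r"(?P<service>[\w-]+): "
    r"(?P<message>.*)"
)


def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LINE_PATTERN.match(line.strip())
    return match.groupdict() if match else None


record = parse_line("2024-05-01 12:00:03 ERROR checkout: payment gateway timeout")
print(record)
```

Once every line is a dictionary with the same keys, filtering by `level` or `service` becomes a simple lookup rather than a text scan.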

A good log aggregation platform should support multiple log types as well as retention policies that determine how long old logs are kept and how much storage capacity log data requires.

Once logs are collected and aggregated, they can be searched and analyzed in real time to quickly pinpoint any issues requiring attention from IT operators. This significantly decreases mean time to resolution (MTTR) and improves response times for critical IT operations.

Logs provide administrators, security analysts, and engineers with invaluable information for their roles. They help teams quickly detect downtime, analyze system health, and identify problems faster, saving organizations valuable time and resources.
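A retention policy can be thought of as a cutoff date applied to stored records. The following is a minimal sketch under that assumption; the function name `apply_retention` and the record layout are hypothetical, and real platforms usually enforce retention at the storage layer rather than in application code.

```python
from datetime import datetime, timedelta, timezone


def apply_retention(records, max_age_days, now=None):
    """Keep only records whose timestamp falls inside the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [r for r in records if r["timestamp"] >= cutoff]


# Fixed "now" so the example is reproducible.
now = datetime(2024, 5, 31, tzinfo=timezone.utc)
records = [
    {"timestamp": datetime(2024, 5, 30, tzinfo=timezone.utc), "message": "recent"},
    {"timestamp": datetime(2024, 1, 1, tzinfo=timezone.utc), "message": "old"},
]
kept = apply_retention(records, max_age_days=30, now=now)
print([r["message"] for r in kept])
```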

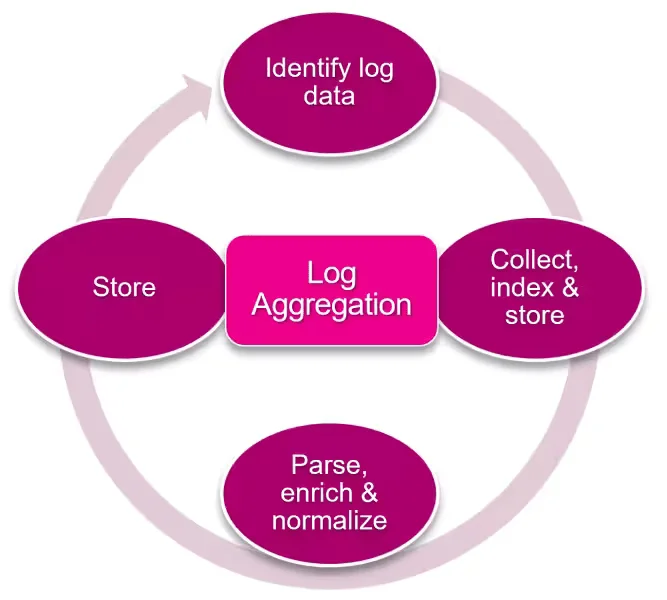

How does log aggregation work?

Log files are generated in every business, application, and infrastructure environment. They provide records detailing activities such as who accessed a system or which requests failed to process. With traditional approaches, however, you would have to sort through hundreds of logs to understand where a problem originated.

Log aggregation collects disparate logs into one central location so that tech pros gain a comprehensive view of event data across distributed systems and applications.
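One way to picture the "central location" is a single timeline built from several per-source logs. The sketch below assumes each source is already sorted by time and uses ISO 8601 UTC timestamps, so plain string comparison orders them correctly; the sample entries and the `aggregate` helper are invented for illustration.

```python
import heapq

# Each source is assumed to be sorted by its own timestamps.
web_logs = [
    ("2024-05-01T12:00:01Z", "web", "GET /cart 200"),
    ("2024-05-01T12:00:05Z", "web", "GET /checkout 500"),
]
db_logs = [
    ("2024-05-01T12:00:04Z", "db", "connection pool exhausted"),
]


def aggregate(*sources):
    """Merge already-sorted per-source logs into one time-ordered list."""
    return list(heapq.merge(*sources, key=lambda entry: entry[0]))


timeline = aggregate(web_logs, db_logs)
for timestamp, source, message in timeline:
    print(timestamp, source, message)
```

Seen in one timeline, the database error lands just before the failed checkout request, which is the kind of connection that is hard to spot when the two logs live on different machines.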

In turn, this provides greater insight into application performance and quicker troubleshooting. Therefore, any organization should invest in a log aggregation solution capable of efficiently handling large volumes of data while offering fast search capabilities.

Various solutions can assist organizations in collecting and analyzing logs. One widely adopted option is the Elastic Stack, built around three tools (Elasticsearch, Logstash, and Kibana) that together form the foundation of an efficient log management solution capable of meeting both security and compliance needs.

Why is log aggregation important?

Troubleshooting complex systems is never simple, and sifting through large log files is often cumbersome and time-consuming. To troubleshoot successfully, you must locate files, understand data formats, search for errors, and connect information between logs.

As part of modern log management, aggregating log data from multiple sources is critical. Doing this allows IT teams to identify issues before they impact productivity, thus helping avoid downtime and revenue loss.

Aggregating log data can also assist security analysts in responding to attacks more swiftly and efficiently and provide insight into their systems' performance. This is key for detecting data breaches, complying with regulatory frameworks, and optimizing application performance.

Log management tools save IT teams time, energy, and money. Instead of manually collecting and reviewing logs, IT teams can spend their time solving problems.

Log aggregation is essential for organizations operating in a distributed environment with multiple applications, services, and infrastructures contributing to data logs. Lack of consistency across logs is often an obstacle.

As such, you must utilize a log management solution to aggregate all the logs from various systems into one searchable database for easy analysis and dashboard creation. With such a solution, users can perform free-text searches, complex RegEx queries for in-depth analysis, and dashboard-building operations.
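To make the difference between free-text and RegEx searches concrete, here is a small sketch over an in-memory list of log lines. The sample lines and both helper functions are assumptions for illustration; a real log management solution would run such queries against an index rather than a Python list.

```python
import re

logs = [
    "2024-05-01 12:00:01 INFO web: GET /cart 200",
    "2024-05-01 12:00:05 ERROR web: GET /checkout 500",
    "2024-05-01 12:00:06 ERROR db: connection pool exhausted",
]


def free_text_search(lines, term):
    """Case-insensitive substring match."""
    term = term.lower()
    return [line for line in lines if term in line.lower()]


def regex_search(lines, pattern):
    """Return lines where the regular expression matches anywhere."""
    compiled = re.compile(pattern)
    return [line for line in lines if compiled.search(line)]


errors = free_text_search(logs, "error")
# Lines ending in a 5xx HTTP status code.
server_errors = regex_search(logs, r" 5\d{2}$")
print(len(errors), server_errors)
```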

What's Involved in Log Aggregation?

Log aggregation is collecting log data in one place for easy searching, which allows security teams to identify and address potential threats more effectively. At the same time, network administrators use log aggregation to detect issues in their infrastructure.

As technology evolves, enterprises produce increasing amounts of structured and unstructured log data from various devices and systems within their infrastructure, which can take time to process and manage.

Aggregating logs is necessary for managing and analyzing vast amounts of data, especially as it's stored in various formats and accessed from numerous sources. Aggregation can be accomplished in various ways - for instance, by collecting logs on network devices, sending them to a central location for storage, and parsing them into an understandable format.

Logs may also be collected via a processing pipeline incorporating syslog daemons or agents that continuously ship, parse, and index incoming logs.

Tech pros can use a processing pipeline to manage logs with varied data formats more easily by mapping original timestamps and values to standardized key-value pairs such as dates, times, or status codes. This allows faster searches and more robust analysis.
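The mapping step might look something like the sketch below, which converts timestamps from two assumed input formats into one ISO 8601 UTC form and casts the status code to an integer. The list of formats and the input keys (`time`, `status`) are hypothetical; a real pipeline would configure these per source.

```python
from datetime import datetime, timezone

# Assumed input formats; real sources will differ.
KNOWN_FORMATS = [
    "%Y-%m-%dT%H:%M:%S%z",   # ISO 8601 with UTC offset
    "%d/%b/%Y:%H:%M:%S %z",  # web server access-log style
]


def normalize_timestamp(raw):
    """Parse a timestamp in any known format and return ISO 8601 in UTC."""
    for fmt in KNOWN_FORMATS:
        try:
            parsed = datetime.strptime(raw, fmt)
        except ValueError:
            continue
        return parsed.astimezone(timezone.utc).isoformat()
    raise ValueError(f"unrecognized timestamp: {raw!r}")


def normalize(record):
    """Map source-specific fields to standardized key-value pairs."""
    return {
        "timestamp": normalize_timestamp(record["time"]),
        "status": int(record["status"]),
    }


print(normalize({"time": "01/May/2024:14:00:03 +0200", "status": "500"}))
```

With every record carrying the same keys and a UTC timestamp, events from different systems can be sorted and compared directly.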

Log aggregation can be pivotal in decreasing response times and helping IT staff identify problems before they cause significant downtime, potentially saving millions in lost revenues from outages.

What Types of Logs Should You Aggregate?

One of the most significant challenges IT teams face is identifying and solving performance issues before they affect user experience or company operations. Log data provides invaluable information that allows IT teams to improve infrastructure and applications while mitigating risks. It also enables them to show customers they value them through higher service levels and less downtime.

Look for log aggregation solutions that can process various log types while providing insightful information for decision-makers in a timely and budget-conscious manner. Finding an effective solution to sort through gigabytes of data may take a lot of work, but the right choice pays off quickly. Machine learning, data federation, and cloud storage are features to look for.

Aggregation is most commonly associated with application or system-level logs, but it can also be applied to network traffic data to uncover security breaches and illicit activities. Furthermore, well-designed log aggregation solutions can be deployed worldwide for scalability and security.

Features of a Log Aggregation Platform

Log aggregation platforms are software components designed to collect log information from network devices and computing systems, compress it, store it centrally for analysis, and use encryption at rest and in transit to protect sensitive data.

A centralized log management solution reduces the time-consuming task of manually inspecting various log files by standardizing data and making it easier to analyze, speeding up monitoring and detecting performance issues more quickly.

Log aggregation platforms offer users a centralized dashboard to identify and compare key metrics easily. This helps developers and security analysts understand system health more quickly.

Log aggregation tools offer additional filters and complex searches to make troubleshooting easier, and they can correlate data from various sources, cloud environments, and applications to detect errors or hidden patterns within logging data.
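Correlation can take many forms. One simple version, sketched here, groups records from different sources by a shared identifier. The `trace_id` field and the sample records are assumptions for the example; which key is available for correlation depends on how your applications log.

```python
from collections import defaultdict


def correlate_by_trace(records):
    """Group records from any source under their shared trace ID."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record["trace_id"]].append(record)
    return dict(grouped)


records = [
    {"trace_id": "t-1", "source": "web", "message": "POST /checkout"},
    {"trace_id": "t-2", "source": "web", "message": "GET /cart"},
    {"trace_id": "t-1", "source": "db", "message": "deadlock detected"},
]
by_trace = correlate_by_trace(records)
print([r["source"] for r in by_trace["t-1"]])
```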

Choose a log aggregation tool that automatically enriches incoming logs with metadata and additional information such as tags or trace IDs - this will allow security analysts to track threats more closely and improve the quality of their investigations.
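Enrichment of this kind could be sketched as follows. The `enrich` function, the tag values, and the choice to generate a random trace ID when none is present are all assumptions for illustration; actual tools typically derive this metadata from the host, the deployment, or tracing headers.

```python
import uuid


def enrich(record, tags, trace_id=None):
    """Return a copy of the record with merged tags and a trace ID attached."""
    enriched = dict(record)
    enriched["tags"] = sorted(set(record.get("tags", [])) | set(tags))
    # Prefer an existing ID, then the supplied one, then a freshly generated one.
    enriched["trace_id"] = record.get("trace_id") or trace_id or uuid.uuid4().hex
    return enriched


raw = {"message": "login failed", "tags": ["auth"]}
enriched = enrich(raw, tags=["prod", "auth"], trace_id="abc123")
print(enriched)
```

Returning a copy leaves the original record untouched, which keeps the raw log available for auditing.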

Another advantage of consolidated log management is that it provides audit trails for applications and components that handle sensitive consumer data, which is essential for complying with regulations such as PCI DSS or HIPAA that impose stringent logging requirements.

As part of your log aggregation strategy, consider employing a log aggregation tool with real-time dashboarding and alerts so that IT staff can respond swiftly to security incidents or outages and minimize revenue lost through downtime. This could help speed up IT response time while protecting your business's revenue growth potential.
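A basic alert rule can be expressed as "fire when at least N errors occur within a time window." The class below is a minimal sketch of that idea, with timestamps given as plain seconds; the class name, threshold, and window size are hypothetical, and production tools offer far richer alert conditions.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when `threshold` or more errors fall within `window_seconds`."""

    def __init__(self, threshold, window_seconds):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._error_times = deque()

    def observe(self, timestamp, level):
        """Record one event; return True if the alert should fire."""
        if level == "ERROR":
            self._error_times.append(timestamp)
        # Drop errors that have fallen out of the window.
        while self._error_times and timestamp - self._error_times[0] > self.window_seconds:
            self._error_times.popleft()
        return len(self._error_times) >= self.threshold


alert = ErrorRateAlert(threshold=3, window_seconds=60)
events = [(0, "ERROR"), (10, "ERROR"), (20, "ERROR"), (100, "INFO")]
fired = [alert.observe(timestamp, level) for timestamp, level in events]
print(fired)
```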

FAQ Section

What is the purpose of log aggregation?

Log aggregation helps organizations gain insights into their infrastructure, detect anomalies, investigate security incidents, and troubleshoot issues more efficiently by providing a comprehensive view of system logs in one centralized location.

What are the benefits of log aggregation?

Log aggregation improves operational efficiency, enables faster troubleshooting, enhances security monitoring, aids in compliance adherence, and provides a historical record for auditing and analysis purposes.

What are the challenges of log aggregation?

Challenges may include handling a high volume of logs, managing log formats and structures, ensuring log integrity and security, and optimizing storage and performance to accommodate large-scale log data.

Can log aggregation help with compliance?

Yes, log aggregation simplifies compliance by providing a centralized log repository that can be easily searched and audited to meet regulatory requirements and demonstrate adherence to security standards.