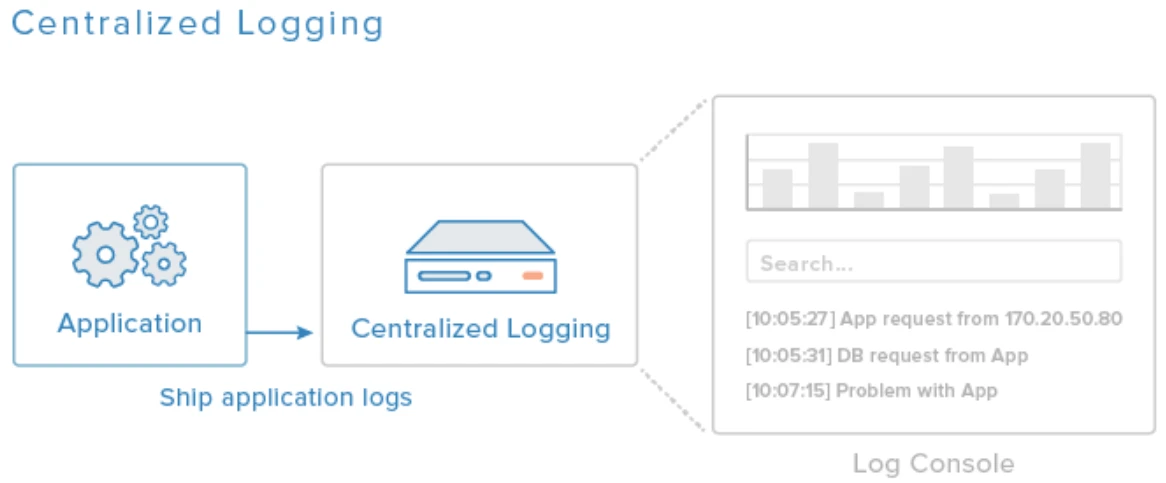

What is Centralized Logging?

Centralized logging is the process of collecting and storing log data from multiple systems, applications, and devices into a single, unified location. Instead of logs being scattered across servers, endpoints, and network tools, centralized logging consolidates them into one place where they can be easily searched, monitored, and analyzed. This method is essential for organizations that manage complex or distributed IT environments, especially those that rely heavily on cloud services, microservices, or hybrid infrastructure.

At its core, centralized logging simplifies the task of tracking system events and troubleshooting issues. When a problem occurs—whether it’s a failed login, a dropped packet, or an application crash—logs are the first place IT teams look to find out what happened. Without a centralized system, gathering those logs from different machines or cloud services can be time-consuming and error-prone. By pulling everything into a centralized repository, engineers and administrators gain immediate access to comprehensive insights that speed up diagnostics and resolution.

This approach also plays a crucial role in cybersecurity. Logs contain detailed records of user actions, system behaviors, and network events. Centralizing these logs helps security teams detect unusual activity, identify potential breaches, and perform forensic investigations more effectively. It’s not just about having the data—it’s about having the right data in the right place at the right time.

Centralized logging systems typically consist of three core components: log shippers or agents that collect data from various sources, a central server or cloud platform that stores the logs, and a user interface or dashboard that allows for querying and analysis. Popular tools used for centralized logging include the ELK Stack (Elasticsearch, Logstash, Kibana), Graylog, Fluentd, Splunk, and others. These tools offer features like real-time monitoring, alerting, visualizations, and integration with incident response systems.
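To make the three components concrete, here is a minimal in-memory sketch in Python. The `Agent`, `CentralStore`, and `LogRecord` names are hypothetical and chosen for illustration; real shippers such as Logstash or Fluentd add parsing, buffering, and network transport that this toy omits.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LogRecord:
    host: str
    source: str   # e.g. "nginx", "postgres"
    level: str    # e.g. "INFO", "ERROR"
    message: str


@dataclass
class CentralStore:
    """Stand-in for the central server: keeps every record in one place."""
    records: list[LogRecord] = field(default_factory=list)

    def ingest(self, record: LogRecord) -> None:
        self.records.append(record)

    def query(self, predicate: Callable[[LogRecord], bool]) -> list[LogRecord]:
        """Stand-in for the query interface: filter all records with one predicate."""
        return [r for r in self.records if predicate(r)]


@dataclass
class Agent:
    """Stand-in for a log shipper running on a single host."""
    host: str
    store: CentralStore

    def ship(self, source: str, level: str, message: str) -> None:
        # Tag each entry with its origin so it stays traceable after centralization.
        self.store.ingest(LogRecord(self.host, source, level, message))


store = CentralStore()
Agent("web-01", store).ship("nginx", "ERROR", "upstream timed out")
Agent("db-01", store).ship("postgres", "INFO", "checkpoint complete")
Agent("web-02", store).ship("nginx", "ERROR", "upstream timed out")

errors = store.query(lambda r: r.level == "ERROR")
print([r.host for r in errors])  # ['web-01', 'web-02']
```

A single query over the central store answers a question ("which hosts are throwing this error?") that would otherwise require logging in to each machine.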

In addition to operational benefits, centralized logging also supports compliance and audit requirements. Regulations such as GDPR and HIPAA, along with audit frameworks such as SOC 2, often require businesses to maintain detailed records of system activity and to define how long those records are kept. A centralized logging system makes it easier to meet these obligations and demonstrate accountability during audits.

Ultimately, centralized logging is a foundational practice for modern IT operations, helping teams gain visibility, reduce downtime, and respond faster to both technical and security challenges. As environments become more distributed and dynamic, the importance of centralized logging continues to grow.

Why Centralized Logging is Critical for Modern IT Environments

Centralized logging is critical for modern IT environments because of the growing complexity, scale, and security demands faced by today’s organizations. As businesses shift toward hybrid and multi-cloud infrastructures, containerized applications, and microservices architectures, the number of data sources generating logs has exploded. In such a distributed landscape, relying on manual or fragmented logging approaches leads to blind spots, slower response times, and missed opportunities to detect critical issues. Centralized logging provides a unified view of all system activity, enabling IT teams to manage operations more efficiently and securely.

One of the primary reasons centralized logging is so vital is its role in real-time visibility and rapid troubleshooting. When logs are scattered across various environments—local servers, cloud instances, virtual machines, and third-party services—it becomes difficult to trace the root cause of performance issues or outages. Centralized logging aggregates these logs into a single searchable location, helping teams quickly identify patterns, track down errors, and resolve problems before they escalate. This reduces downtime, improves system reliability, and ensures a smoother user experience.
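One reason aggregation speeds up troubleshooting is that it puts events from different machines on a single timeline. The Python sketch below merges per-host streams, each assumed to be already sorted by timestamp, using the standard library's `heapq.merge`; the host names and messages are made up for illustration.

```python
import heapq
from datetime import datetime

# Hypothetical per-host logs, each already sorted by time: (timestamp, host, message)
web = [
    (datetime(2024, 5, 1, 10, 0, 1), "web-01", "GET /checkout 200"),
    (datetime(2024, 5, 1, 10, 0, 4), "web-01", "GET /checkout 502"),
]
db = [
    (datetime(2024, 5, 1, 10, 0, 2), "db-01", "connection pool exhausted"),
    (datetime(2024, 5, 1, 10, 0, 3), "db-01", "query timeout after 1000 ms"),
]


def unified_timeline(*streams):
    """Lazily merge sorted per-host streams into one time-ordered stream."""
    return heapq.merge(*streams, key=lambda event: event[0])


for ts, host, msg in unified_timeline(web, db):
    print(ts.time(), host, msg)
```

In this invented example, the merged view shows the database errors immediately preceding the 502, a connection that is easy to miss when the two logs live on different machines. The technique only works if host clocks are synchronized (for example via NTP) and timestamps share a time zone.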

Security is another major driver. Modern threats are more sophisticated, often leaving traces in log files that might otherwise go unnoticed. Centralized logging enables continuous monitoring and correlation of security events across all endpoints, firewalls, authentication systems, and applications. This makes it easier to detect anomalies, flag unauthorized access attempts, and respond to potential breaches in a timely manner. It also allows security analysts to conduct more effective forensic investigations by having a complete record of what happened and when.
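As a simple illustration of cross-system correlation, the sketch below flags any source IP that has failed logins on several distinct hosts, a pattern that no single host's log would reveal on its own. The event format and the threshold of three hosts are assumptions for the example, not a recommended detection rule.

```python
from collections import defaultdict

# Hypothetical normalized authentication events gathered from many hosts.
events = [
    {"host": "web-01", "src_ip": "203.0.113.7", "outcome": "failure"},
    {"host": "web-02", "src_ip": "203.0.113.7", "outcome": "failure"},
    {"host": "vpn-01", "src_ip": "203.0.113.7", "outcome": "failure"},
    {"host": "web-01", "src_ip": "198.51.100.4", "outcome": "failure"},
    {"host": "web-01", "src_ip": "198.51.100.4", "outcome": "success"},
]


def ips_failing_across_hosts(events, min_hosts=3):
    """Return source IPs with failed logins on at least `min_hosts` distinct hosts."""
    hosts_by_ip = defaultdict(set)
    for e in events:
        if e["outcome"] == "failure":
            hosts_by_ip[e["src_ip"]].add(e["host"])
    return sorted(ip for ip, hosts in hosts_by_ip.items() if len(hosts) >= min_hosts)


print(ips_failing_across_hosts(events))  # ['203.0.113.7']
```

Each host sees only one failure from 203.0.113.7, which looks harmless locally; only the centralized view shows the same address probing three systems. A production rule would also bound the check to a time window.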

Compliance and audit requirements further underscore the importance of centralized logging. Organizations in regulated industries—such as healthcare, finance, and government—are required to retain logs, track user activity, and demonstrate control over their systems. A centralized logging system helps fulfill these requirements by automating log retention, ensuring log integrity, and making it easier to generate audit trails when needed.

Additionally, centralized logging supports scalability and automation. As organizations grow, so do their IT footprints, and manual log management simply doesn't scale. Centralized platforms are designed to scale out as log volume grows, so they can keep ingesting large streams of log entries while applying filters, generating alerts, and triggering automated workflows. This allows DevOps, SecOps, and IT teams to keep pace with demand while maintaining control.

Key Benefits of Centralized Logging Systems

Centralized logging systems offer a wide range of benefits that significantly improve the efficiency, security, and reliability of modern IT environments. By consolidating logs from across the infrastructure into a single platform, organizations gain better control over their data and can make faster, more informed decisions. Whether you’re managing a small network or a complex, distributed cloud architecture, centralized logging provides the foundation for streamlined operations and proactive incident response.

One of the most important benefits of centralized logging is enhanced visibility. When logs are collected from various sources—servers, applications, cloud platforms, firewalls, and endpoints—they paint a complete picture of what’s happening across your systems. This comprehensive view makes it easier to identify trends, spot anomalies, and correlate events that may have gone unnoticed in a fragmented logging setup. With all logs centralized, IT teams can quickly search and analyze data in one place without having to log in to multiple systems.

Another key advantage is faster troubleshooting and root cause analysis. When issues arise, every second counts. Centralized logging helps teams pinpoint the exact source of a problem by aggregating related log entries from different services or machines. This speeds up resolution times, minimizes downtime, and ensures more consistent performance across critical systems. Instead of manually combing through logs on multiple servers, teams can use search queries, filters, and dashboards to zero in on the issue.
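Aggregating related entries usually depends on a shared field, such as a request or correlation ID, that each service writes into its logs. Assuming the services propagate a `request_id` field (a common convention, but not something every system does automatically), filtering and sorting on it reconstructs a single request's path. The services and messages below are hypothetical.

```python
# Hypothetical entries from three services, interleaved as they arrive centrally.
entries = [
    {"ts": 1, "service": "gateway", "request_id": "r-42", "msg": "POST /orders"},
    {"ts": 1, "service": "gateway", "request_id": "r-43", "msg": "GET /health"},
    {"ts": 2, "service": "orders", "request_id": "r-42", "msg": "reserve stock"},
    {"ts": 3, "service": "payments", "request_id": "r-42", "msg": "card declined"},
    {"ts": 4, "service": "gateway", "request_id": "r-42", "msg": "500 Internal Server Error"},
]


def trace(entries, request_id):
    """Return one request's entries across all services, in time order."""
    matching = (e for e in entries if e["request_id"] == request_id)
    return sorted(matching, key=lambda e: e["ts"])


for e in trace(entries, "r-42"):
    print(e["ts"], e["service"], e["msg"])
```

Here the trace points to the payments service as the likely origin of the 500 that the gateway returned.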

Centralized logging also supports stronger security postures. Log data is often the first place where evidence of a cyberattack appears—failed logins, privilege escalations, suspicious file access, and unusual network activity. By centralizing this data, security teams can set up alerts, monitor patterns in real time, and respond more quickly to threats. It also simplifies forensic analysis in the aftermath of an incident, providing a single source of truth that helps reconstruct the attack timeline.
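A common alert built on centralized authentication logs is a burst of failed logins followed by a success for the same account, which can indicate a password-guessing attack that eventually worked. The sketch below assumes events are already sorted by time and uses an arbitrary threshold of five failures within ten minutes; real deployments tune these values.

```python
from datetime import datetime, timedelta


def brute_force_successes(events, min_failures=5, window=timedelta(minutes=10)):
    """Yield (user, timestamp) when a success follows at least `min_failures`
    failures for the same user within `window`.

    `events` is an iterable of (timestamp, user, outcome) tuples sorted by time.
    """
    recent_failures = {}  # user -> failure timestamps still inside the window
    for ts, user, outcome in events:
        # Forget failures older than the window.
        failures = [t for t in recent_failures.get(user, []) if ts - t <= window]
        if outcome == "failure":
            failures.append(ts)
            recent_failures[user] = failures
        elif outcome == "success":
            if len(failures) >= min_failures:
                yield user, ts
            recent_failures[user] = []  # a successful login resets the streak


start = datetime(2024, 5, 1, 9, 0)
log = [(start + timedelta(seconds=30 * i), "alice", "failure") for i in range(6)]
log.append((start + timedelta(minutes=4), "alice", "success"))
log.append((start + timedelta(minutes=5), "bob", "success"))
print(list(brute_force_successes(log)))
```

Only "alice" is reported: six failures in under three minutes precede her successful login, while "bob" logs in cleanly.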

From a compliance standpoint, centralized logging makes it easier to meet industry regulations and standards. HIPAA, PCI DSS, and SOC 2, for example, all call for robust logging practices and data retention policies. Centralized systems often include built-in features to automate log collection, enforce retention rules, and maintain tamper-evident records, reducing the risk of audit failures and non-compliance penalties.

Finally, centralized logging supports automation and operational efficiency. With logs flowing into one system, organizations can apply machine learning, correlation rules, and integrations with incident management tools to detect problems before they escalate. This enables proactive IT management and creates a more resilient infrastructure. Overall, the benefits of centralized logging extend far beyond convenience—it’s a strategic investment in performance, security, and control.

Common Tools Used for Centralized Logging

There are several powerful tools available for centralized logging, each offering unique features and capabilities to help organizations manage, analyze, and visualize log data from various sources. These tools are designed to collect logs from across an infrastructure—servers, applications, containers, network devices, and cloud environments—and centralize them into a single platform for streamlined operations, better visibility, and faster incident response. Choosing the right tool often depends on the specific needs of your environment, such as scalability, ease of use, integration options, and budget.

One of the most widely used centralized logging solutions is the ELK Stack, which stands for Elasticsearch, Logstash, and Kibana. Elasticsearch acts as the search and analytics engine, Logstash collects and processes the logs, and Kibana provides the visualization layer. This open-source stack is highly customizable and scalable, making it ideal for organizations with complex or high-volume logging needs. It supports real-time log ingestion, filtering, and dashboard creation, which helps DevOps and security teams gain actionable insights quickly.
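In the ELK Stack, Logstash typically turns raw text into structured fields (commonly with its grok filter) before Elasticsearch indexes them. The Python sketch below imitates that parsing step for a line in the common log format used by Apache and NGINX access logs. It is an analogy to what a grok pattern does, not Logstash's own code or configuration.

```python
import re

# Common log format: client ident user [time] "method path protocol" status bytes
CLF = re.compile(
    r'(?P<client>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_clf(line):
    """Turn one raw access-log line into a dict of fields, or None if it doesn't match."""
    m = CLF.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    # Convert numeric fields so they can be filtered and aggregated as numbers.
    fields["status"] = int(fields["status"])
    fields["bytes"] = 0 if fields["bytes"] == "-" else int(fields["bytes"])
    return fields


raw = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
print(parse_clf(raw))
```

Once fields like `status` are numbers rather than free text, queries such as "all 5xx responses in the last hour" become straightforward.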

Another popular option is Graylog, a log management platform that stores and searches log data in Elasticsearch or OpenSearch and keeps its configuration in MongoDB. Graylog simplifies the process of collecting, indexing, and analyzing log data, with a strong focus on user-friendly dashboards and alerting features. It's often favored for its balance of power and ease of use, as well as its ability to support large-scale environments with minimal overhead.

Splunk is a commercial solution known for its enterprise-grade features and robust analytics capabilities. It can ingest large volumes of log data and apply advanced machine learning models for anomaly detection, predictive analytics, and automated alerting. Splunk’s strength lies in its extensive integrations, scalability, and ability to handle structured and unstructured data from diverse sources. While it’s more expensive than open-source alternatives, its feature-rich platform is often worth the investment for larger organizations.

Fluentd and Fluent Bit are also widely used log collectors, especially in cloud-native and containerized environments. They efficiently collect, transform, and forward log data to centralized storage solutions such as Elasticsearch, AWS CloudWatch, or Google Cloud Logging. Fluent Bit is the more lightweight of the two and is often deployed on every Kubernetes node, while Fluentd is valued for its extensive plugin ecosystem; both are common building blocks in Kubernetes logging pipelines.
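Collectors like Fluentd and Fluent Bit buffer records and forward them in batches rather than sending each line individually. The sketch below shows that idea in Python. The `BatchForwarder` class, its `send` callback, and the batch size are hypothetical; a real collector also persists its buffer to disk, flushes on a timer, and retries with backoff.

```python
from typing import Callable


class BatchForwarder:
    """Buffer log records and hand them to `send` once a batch is full."""

    def __init__(self, send: Callable[[list[dict]], bool], batch_size: int = 3):
        self.send = send              # returns True if the destination accepted the batch
        self.batch_size = batch_size
        self.buffer: list[dict] = []

    def emit(self, record: dict) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Keep records buffered if delivery failed, so the next flush retries them.
        if self.buffer and self.send(list(self.buffer)):
            self.buffer.clear()


delivered = []


def fake_send(batch):
    delivered.append(batch)
    return True


fwd = BatchForwarder(fake_send, batch_size=3)
for i in range(7):
    fwd.emit({"seq": i})
fwd.flush()  # e.g. on shutdown, push the partial final batch
print([len(b) for b in delivered])  # [3, 3, 1]
```

Batching trades a little latency for far fewer network round trips, which matters when thousands of containers ship logs at once.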

Other tools worth mentioning include Loggly, Papertrail, Sumo Logic, and Datadog, which offer cloud-based centralized logging services with simple setup and built-in dashboards. These are ideal for teams looking for fast deployment and minimal infrastructure management.

Ultimately, the choice of tool depends on your environment’s complexity, compliance needs, and technical expertise. A centralized logging solution should not only gather logs but also provide actionable insights, reduce response times, and support the ongoing growth and evolution of your IT ecosystem.

Centralized Logging vs Decentralized Logging

Understanding the difference between centralized logging and decentralized logging is essential for selecting the right approach to manage system logs effectively. While both methods involve capturing logs from various sources, they differ in how those logs are stored, accessed, and analyzed—each with its own set of advantages and challenges. As IT infrastructures become more distributed and complex, making an informed decision between centralized and decentralized logging can have a significant impact on performance, security, and operational efficiency.

Centralized logging refers to the practice of collecting log data from all systems, servers, applications, and network devices into a single, centralized platform. This setup allows IT and security teams to access all logs from one location, making it easier to monitor system behavior, troubleshoot issues, and correlate events across the entire environment. Tools like the ELK Stack, Splunk, and Graylog are commonly used for this purpose. Centralized logging simplifies log management, provides comprehensive visibility, and supports compliance by offering consistent retention and access policies.

In contrast, decentralized logging involves storing and managing logs separately on each system or application where they originate. This method may work well in smaller or isolated environments where only a few servers are involved and centralized infrastructure is unnecessary. However, as environments scale, decentralized logging quickly becomes inefficient. Troubleshooting requires logging into multiple systems, making it time-consuming to gather and correlate relevant data. There's also a higher risk of missing critical events, especially during security incidents, because logs are fragmented and harder to track in real time.

One of the key advantages of centralized logging over decentralized logging is the ability to run cross-system queries, such as correlating a burst of failed VPN logins with firewall denials and application errors from the same time period. This capability is crucial for detecting complex issues or security threats that span multiple systems. Centralized platforms can also apply automated alerts, dashboards, and analytics, helping teams respond faster and more proactively.
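As an illustration of a cross-system query, the sketch below joins firewall logs with application login logs on source IP to find logins from addresses the firewall denied on some other connection. Both log shapes and all field names are invented for the example; in practice this would be written in the logging platform's own query language rather than Python.

```python
# Hypothetical records from two different systems, already in the central store.
firewall = [
    {"src_ip": "203.0.113.7", "action": "deny", "dst_port": 22},
    {"src_ip": "192.0.2.10", "action": "allow", "dst_port": 443},
]
app_logins = [
    {"user": "alice", "src_ip": "192.0.2.10"},
    {"user": "svc-backup", "src_ip": "203.0.113.7"},
]


def logins_from_denied_ips(firewall, app_logins):
    """Return application logins whose source IP the firewall denied at least once."""
    denied = {r["src_ip"] for r in firewall if r["action"] == "deny"}
    return [login for login in app_logins if login["src_ip"] in denied]


print(logins_from_denied_ips(firewall, app_logins))
# [{'user': 'svc-backup', 'src_ip': '203.0.113.7'}]
```

With decentralized logging, the firewall denial and the application login would sit on different machines, and this join would require manually exporting and matching both logs.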

That said, centralized logging does come with considerations such as storage requirements, initial setup complexity, and potential single points of failure if not properly architected. On the other hand, decentralized logging may offer greater resilience in isolated systems and reduced upfront costs, but it sacrifices efficiency, consistency, and visibility.

In today’s cloud-native, microservices-driven environments, centralized logging is generally the preferred choice. It provides the scale, control, and insight needed to keep modern systems secure, stable, and high-performing. While decentralized logging may still have limited use cases, its effectiveness diminishes rapidly as complexity and security requirements grow. For organizations aiming for operational excellence, centralized logging delivers a clear advantage.

Best Practices for Implementing Centralized Logging

Implementing centralized logging effectively requires more than just choosing the right tool—it demands a strategic approach that ensures logs are collected consistently, stored securely, and used efficiently for monitoring, troubleshooting, and compliance. Without the right practices in place, even the most advanced logging systems can become disorganized, noisy, or difficult to scale. Following best practices helps organizations get the most value out of their centralized logging solution while minimizing performance issues and operational risks.

One of the first best practices is defining what to log and why. Not every piece of data needs to be captured, and excessive logging can lead to unnecessary storage costs and noise. Focus on collecting logs that are critical for operations, security, and compliance. This typically includes system events, application errors, access logs, authentication attempts, configuration changes, and network traffic. Establish clear log retention policies based on regulatory requirements and business needs to avoid keeping unnecessary data.

Standardizing log formats is another essential step. Logs should follow a structured format—such as JSON or key-value pairs—that makes it easy to parse and analyze data consistently across different sources. When logs follow a consistent schema, they can be more easily ingested by tools like Elasticsearch or parsed by log shippers like Logstash and Fluentd. This structure enables more accurate filtering, searching, and correlation, especially when dealing with high volumes of data.
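As one way to emit structured logs at the source, the sketch below uses Python's standard `logging` module with a small custom `JsonFormatter` (a name chosen here, not a standard-library class) so that each record becomes one JSON object per line. The field names are an assumption; teams often align them with a shared schema so that every service produces the same keys.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge structured context passed as extra={"fields": {...}}.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)

log.info("payment failed", extra={"fields": {"order_id": "A-1001", "http_status": 502}})
```

Because every line is valid JSON with consistent keys, a shipper can forward it without custom parsing, and queries such as `http_status >= 500` work the same way across services. This sketch does not serialize exception tracebacks, which a fuller formatter would add.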

Security must also be a key focus. Logs often contain sensitive information, such as IP addresses, usernames, and system configurations. Make sure log data is encrypted both in transit and at rest. Implement access controls so that only authorized users can view or modify log entries. Additionally, enable log integrity verification to ensure that logs haven’t been tampered with—especially if you’re using them for forensic or compliance purposes.
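One common way to make tampering detectable is a hash chain: each entry stores a keyed hash (HMAC) computed over its own content plus the previous entry's hash, so editing, deleting, or reordering any entry breaks the link at that point. The Python sketch below illustrates the idea with the standard `hmac` and `hashlib` modules. The hard-coded key is a placeholder; in practice it would be kept in a secrets manager or HSM, separate from the log store.

```python
import hashlib
import hmac

KEY = b"placeholder-key-load-from-a-secrets-manager"  # assumption: illustration only
GENESIS = "0" * 64  # "previous hash" used for the first entry


def _link(prev: str, msg: str) -> str:
    return hmac.new(KEY, (prev + msg).encode(), hashlib.sha256).hexdigest()


def chain_entries(messages):
    """Return (message, mac) pairs where each MAC also covers the previous MAC."""
    chained, prev = [], GENESIS
    for msg in messages:
        mac = _link(prev, msg)
        chained.append((msg, mac))
        prev = mac
    return chained


def verify_chain(chained):
    """Recompute every link; return the index of the first bad entry, or None if intact."""
    prev = GENESIS
    for i, (msg, mac) in enumerate(chained):
        if not hmac.compare_digest(_link(prev, msg), mac):
            return i
        prev = mac
    return None


log = chain_entries(["user alice logged in", "alice changed firewall rule 12", "alice logged out"])
print(verify_chain(log))  # None

tampered = list(log)
tampered[1] = ("alice viewed dashboard", tampered[1][1])  # rewrite history
print(verify_chain(tampered))  # 1
```

One caveat: a chain alone cannot detect entries cut off the end, so implementations typically also record the latest hash somewhere outside the log store.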

Another important best practice is setting up real-time alerts and dashboards. Simply storing logs is not enough—teams need to be notified immediately when certain thresholds are reached or suspicious behaviors are detected. Configure alerts for failed logins, unauthorized access, system errors, or performance degradation. Visualization dashboards can also help teams monitor trends and system health at a glance.
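A threshold alert of the kind described here can be as simple as counting matching events in a sliding time window. The sketch below keeps timestamps in a `deque` and fires when more than a configured number of errors arrive within the window; the class name, threshold, and 60-second window are illustrative assumptions, and real platforms express such rules in their own alerting configuration.

```python
from collections import deque


class WindowThresholdAlert:
    """Fire when more than `threshold` events occur within `window_s` seconds."""

    def __init__(self, threshold: int, window_s: float):
        self.threshold = threshold
        self.window_s = window_s
        self.times = deque()

    def observe(self, ts: float) -> bool:
        """Record an event at time `ts` (seconds, non-decreasing); return True if the alert fires."""
        self.times.append(ts)
        # Drop events that have slid out of the window.
        while self.times and ts - self.times[0] > self.window_s:
            self.times.popleft()
        return len(self.times) > self.threshold


alert = WindowThresholdAlert(threshold=3, window_s=60)
error_times = [0, 10, 20, 100, 110, 115, 118]
print([t for t in error_times if alert.observe(t)])  # [118]
```

The three early errors stay under the threshold, and the alert fires only when four errors land within 60 seconds. A production rule would usually add a cooldown so one incident does not page the team repeatedly.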

Finally, test and review your centralized logging setup regularly. Validate that logs are being captured correctly from all systems and that alerting rules are functioning as intended. As your infrastructure evolves, update your logging architecture to reflect new applications, containers, or cloud services. This ongoing review ensures your logging solution remains effective and scalable over time.
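One practical way to validate that logs are arriving from all systems is to compare the sources you expect against the last time each one actually sent something. The sketch below assumes you maintain an inventory of expected hosts and a per-host last-seen timestamp (both hypothetical inputs) and reports hosts that never reported or have gone quiet for longer than a tolerance.

```python
from datetime import datetime, timedelta


def silent_sources(expected_hosts, last_seen, now, max_silence=timedelta(minutes=15)):
    """Return hosts that never reported or have been silent longer than `max_silence`."""
    silent = []
    for host in sorted(expected_hosts):
        seen = last_seen.get(host)
        if seen is None or now - seen > max_silence:
            silent.append(host)
    return silent


now = datetime(2024, 5, 1, 12, 0)
last_seen = {
    "web-01": now - timedelta(minutes=2),
    "web-02": now - timedelta(hours=3),  # agent may have crashed
}
print(silent_sources({"web-01", "web-02", "db-01"}, last_seen, now))  # ['db-01', 'web-02']
```

Running a check like this on a schedule catches broken agents and newly added hosts that were never onboarded, both of which otherwise surface only when someone goes looking for logs that are not there.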

By following these best practices—focused logging, structured formats, strong security, proactive monitoring, and regular audits—organizations can build a centralized logging system that delivers meaningful insights, supports compliance, and enhances overall IT performance.

Centralized Logging for Compliance and Auditing

Centralized logging plays a crucial role in helping organizations meet compliance requirements and prepare for audits. As regulations across industries continue to tighten—driven by concerns over data privacy, cybersecurity, and accountability—maintaining a complete and verifiable log of system activity is no longer optional. Whether a company operates in healthcare, finance, education, or government, having a centralized logging system is essential for demonstrating control, detecting violations, and responding effectively to regulatory inquiries.

One of the primary compliance benefits of centralized logging is that it creates a single, reliable source of truth for all system, network, and user activity. Logs are often required to track access to sensitive data, monitor user actions, and record changes to configurations or critical systems. By consolidating logs in one place, organizations can ensure that they have consistent records that are easy to retrieve, search, and correlate during internal reviews or external audits. This level of transparency is key to demonstrating compliance with regulations and standards such as HIPAA, GDPR, PCI DSS, SOX, and SOC 2.

Centralized logging also helps enforce log retention policies. Many compliance standards require that logs be retained for specific timeframes—ranging from several months to several years. A centralized system allows organizations to automate these retention policies, ensuring that critical data is preserved for as long as needed and securely deleted when appropriate. This reduces the risk of both non-compliance and unnecessary storage costs.
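Automated retention usually comes down to a rule per log category plus a scheduled purge. The sketch below is a minimal version: the categories and retention periods are placeholders, not values taken from any regulation, so the real numbers must come from your own legal and compliance requirements. Production systems typically rely on the platform's lifecycle features (such as Elasticsearch index lifecycle management) rather than custom code.

```python
from datetime import datetime, timedelta

# Placeholder retention periods per category (NOT regulatory guidance).
RETENTION = {
    "audit": timedelta(days=730),
    "security": timedelta(days=365),
    "debug": timedelta(days=14),
}
DEFAULT_RETENTION = timedelta(days=90)


def apply_retention(records, now):
    """Split records into (kept, expired) based on each record's category and age."""
    kept, expired = [], []
    for rec in records:
        limit = RETENTION.get(rec["category"], DEFAULT_RETENTION)
        (expired if now - rec["ts"] > limit else kept).append(rec)
    return kept, expired


now = datetime(2024, 6, 1)
records = [
    {"category": "debug", "ts": datetime(2024, 5, 1)},     # 31 days old, limit 14
    {"category": "security", "ts": datetime(2024, 1, 1)},  # 152 days old, limit 365
    {"category": "app", "ts": datetime(2024, 1, 1)},       # 152 days old, default 90
]
kept, expired = apply_retention(records, now)
print(len(kept), len(expired))  # 1 2
```

Returning the expired records instead of deleting them in place leaves room for a final check before they are securely removed.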

In addition, centralized logging enables better access control and integrity management. For compliance purposes, it’s important not only to store logs, but also to protect them from unauthorized access and tampering. Centralized platforms often include features like role-based access controls, encryption, and tamper-evident storage, all of which are essential for maintaining the chain of custody for audit logs. These controls ensure that logs can be trusted as accurate records of activity.
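Role-based access control for logs often means mapping each role to the log categories it may read and filtering every query through that mapping. The roles, categories, and `read_logs` helper below are hypothetical; commercial platforms implement this through their own permission models. The sketch also records who read what, since access to audit logs may itself need to be accounted for.

```python
# Hypothetical role -> readable log categories mapping.
ROLE_PERMISSIONS = {
    "sre": {"app", "infra"},
    "security_analyst": {"app", "infra", "auth", "audit"},
    "auditor": {"audit"},
}

access_log = []  # record of who read which categories


def read_logs(user, role, records):
    """Return only the records the role may see, and record the access."""
    allowed = ROLE_PERMISSIONS.get(role, set())  # unknown roles see nothing
    visible = [r for r in records if r["category"] in allowed]
    access_log.append({"user": user, "role": role, "categories": sorted(allowed)})
    return visible


records = [
    {"category": "audit", "msg": "admin granted db access to bob"},
    {"category": "app", "msg": "order service restarted"},
]
print([r["category"] for r in read_logs("carol", "auditor", records)])  # ['audit']
print(read_logs("mallory", "intern", records))  # []
```

Denying by default (an unknown role gets an empty set) is the safer design choice: a misconfigured role exposes nothing rather than everything.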

When it comes time for an audit, having a centralized logging system can dramatically simplify the process. Instead of pulling data from multiple systems, teams can generate detailed reports from a single interface. Auditors can quickly access relevant records, trace the timeline of an incident, and verify that security controls are being enforced. This improves audit readiness and reduces the stress and labor involved in proving compliance.

Overall, centralized logging is not just a technical best practice—it’s a foundational requirement for any organization subject to regulatory oversight. It improves visibility, strengthens accountability, and ensures that you’re always prepared to demonstrate compliance with industry standards and legal obligations.